Using Your Memories

Find and leverage your knowledge exactly when you need it

After creating memories in Nowledge Mem, the real power comes from accessing them at the right moment. Whether you're coding, writing, researching, or working with AI agents, Nowledge Mem ensures your past insights are always within reach.

When You Need Your Memories

Imagine these scenarios:

- During AI conversations: "What was that architectural decision we made last month about microservices?"

- While writing documentation: You need to reference a specific implementation detail you captured weeks ago

- Researching a topic: Connecting dots between insights you've gathered over time

- Starting a new project: Leveraging patterns and learnings from previous work

- Collaborating with agents: Your AI assistant autonomously recalls relevant context from your knowledge base

Nowledge Mem gives you multiple ways to access your memories, each optimized for a different workflow.

How to Access Your Memories

Choose the method that fits your current workflow:

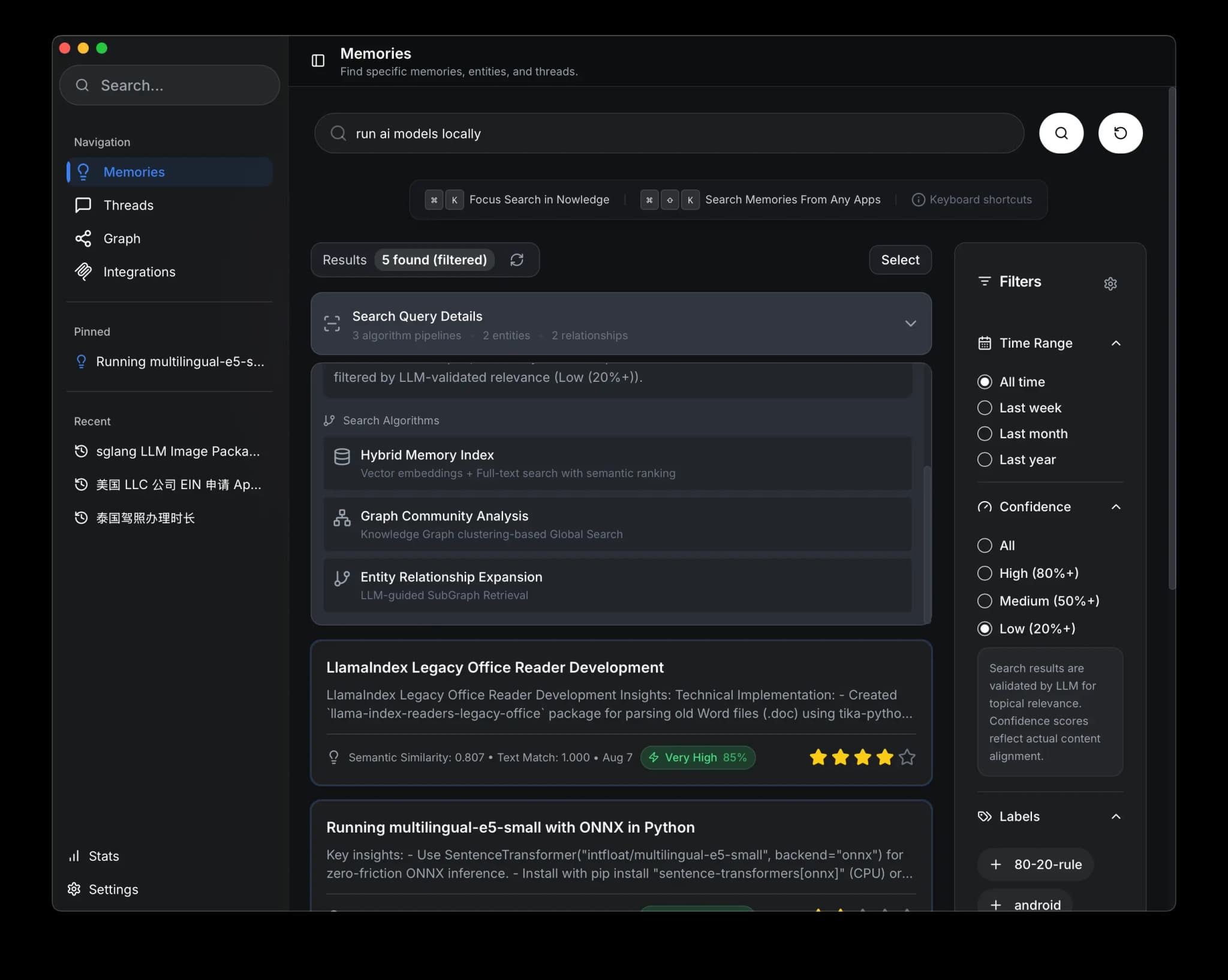

1. In-App Memory Search

When to use: Deep exploration and browsing of your knowledge base, reviewing related memories, or when you want to see the full context and connections.

The primary search interface within Nowledge Mem gives you the most powerful search capabilities with semantic understanding, keyword matching, and graph-based relationship awareness.

Search pipelines:

- Semantic Search: Finds memories by meaning, not just keywords (e.g., search "design patterns" and find memories about "architectural approaches")

- BM25 Search: Traditional keyword-based search for exact matches and specific terms

- Knowledge Graph: Discovers memories through their connections, labels, and graph clusters, surfacing the broader context and relationships around a result
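To make the difference between keyword and semantic matching concrete, here is a minimal sketch of textbook Okapi BM25 scoring. It is not Nowledge Mem's implementation: the function names, the whitespace tokenizer, the `k1`/`b` defaults, and the sample memories are all assumptions chosen for illustration. The IDF term uses the common "+1 inside the log" variant so scores stay non-negative.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Naive tokenizer (assumption): lowercase and split on whitespace.
    return text.lower().split()


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score every document against the query with Okapi BM25."""
    if not docs:
        return []
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Guard against a corpus of empty strings.
    avg_len = (sum(len(t) for t in tokenized) / n_docs) or 1.0
    # Document frequency: how many documents contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))

    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue  # no keyword overlap, no contribution
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            length_norm = k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / (tf[term] + length_norm)
        scores.append(score)
    return scores


# Illustrative memories (made up for this sketch).
memories = [
    "notes on design patterns for plugin systems",
    "architectural approaches for splitting a monolith",
    "regex for email validation",
]
scores = bm25_scores("design patterns", memories)
```

Only the first memory gets a non-zero score here, because the second shares no words with the query even though it is related in meaning. That gap is the kind of case the semantic pipeline is described as covering.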

Quick access:

- macOS:

  - ⌘ + K within the app

  - ⌘ + ⇧ + Space to open memory search from anywhere

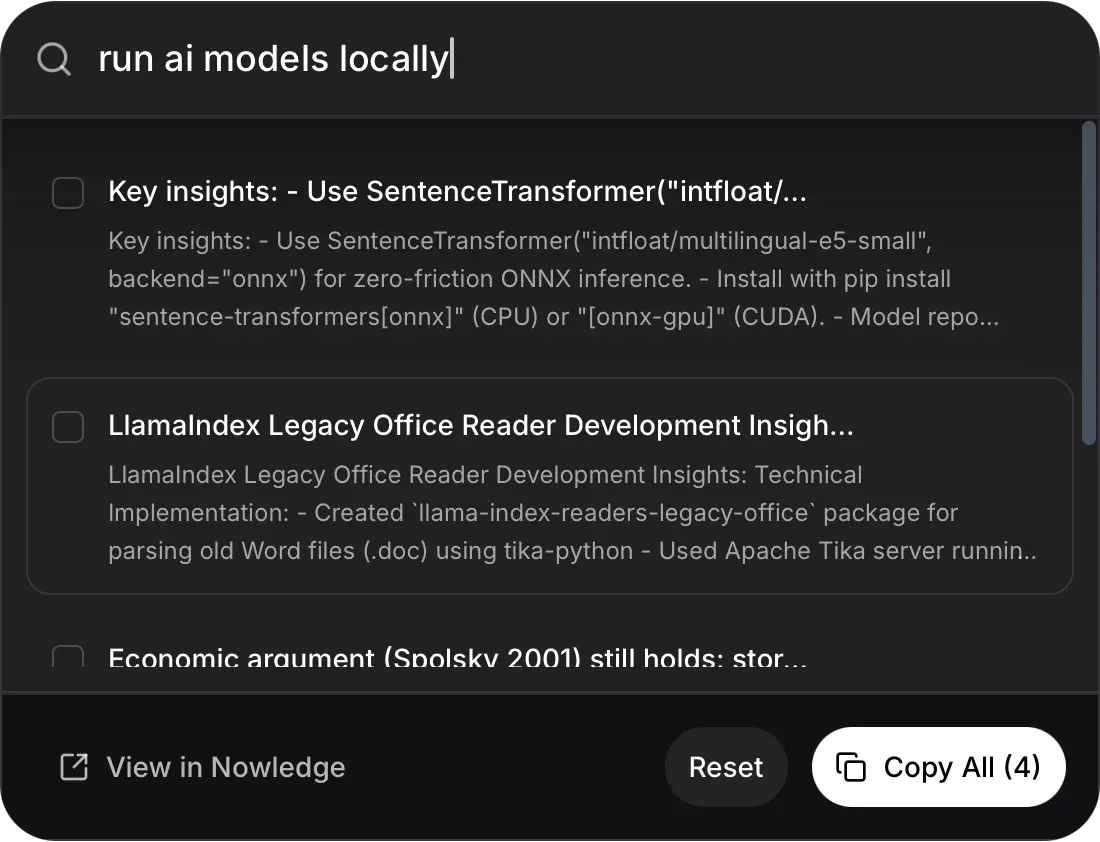

2. Global Launcher

When to use: You're in the middle of writing code, documentation, or an email and need to quickly retrieve a specific memory without switching contexts.

The global launcher is your instant access portal—invoke it from anywhere, search, and paste results directly into your current application. No context switching, no breaking your flow.

Quick access:

- macOS: ⌘ + ⇧ + K

Perfect for:

- Grabbing a code snippet while programming in your IDE

- Referencing a technical detail while writing an email

- Pasting documentation snippets into your editor

- Quickly accessing API keys, commands, or configuration examples

Real Example

You're writing code in VS Code and need the regex pattern you saved last week. Press ⌘ + ⇧ + K, type "regex email validation", select the memory, and paste it directly into your editor, all in a few seconds.

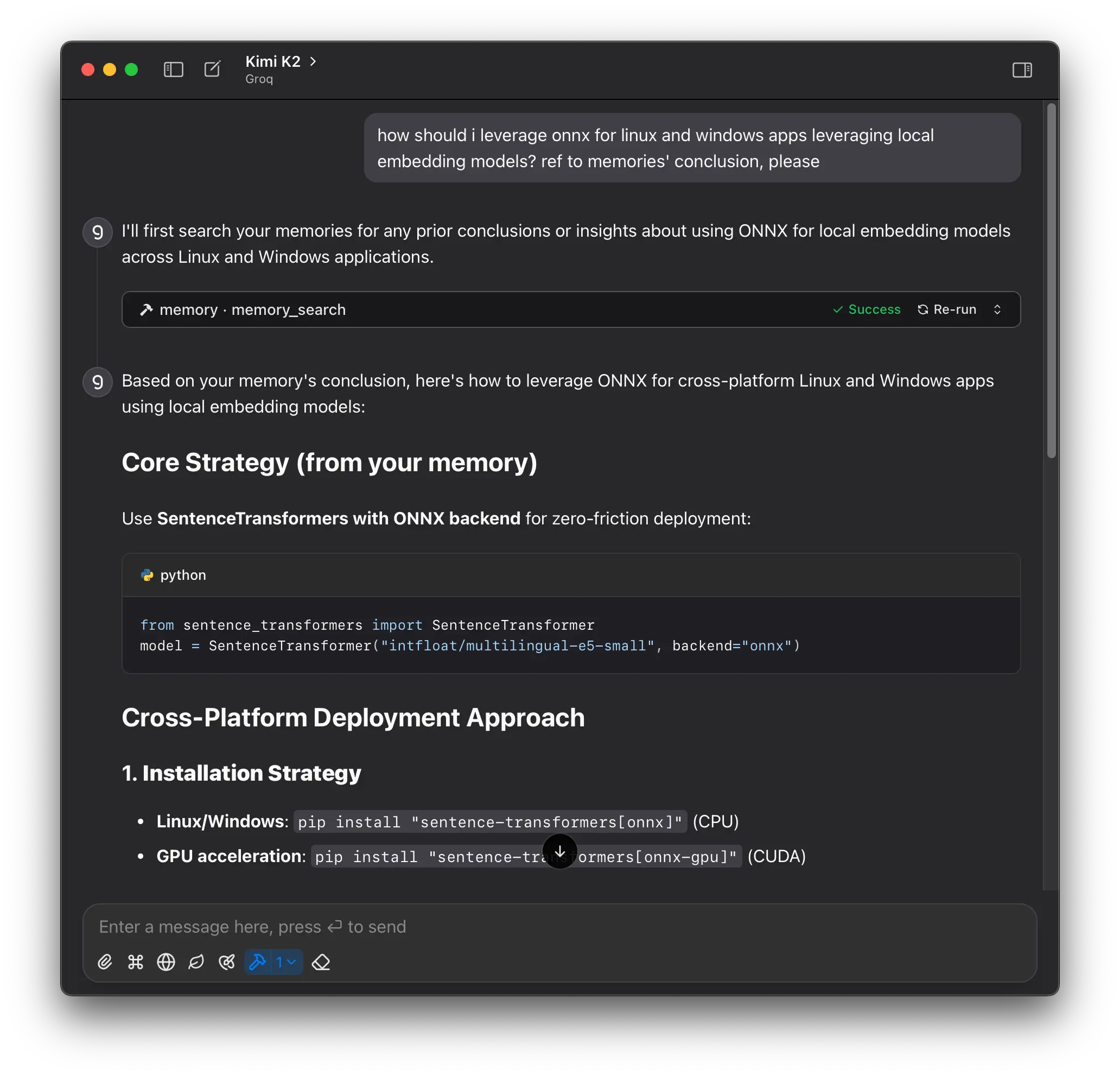

3. Agent-Based Memory Access

When to use: You're working with AI agents (Cursor, Claude, etc.) and want them to have context about your past decisions, learnings, and insights—automatically.

With MCP (Model Context Protocol) integration, your AI agents become extensions of your memory. They can autonomously search and reference your knowledge base during conversations, or you can explicitly ask them to recall specific insights.

During Conversations: Ask Your Agent

The scenario: You're chatting with Cursor about a new feature and need context from past architectural decisions.

How it works:

1. Your AI agent (Claude Code, Cursor, etc.) connects to Nowledge Mem via MCP

2. Ask questions like: "What did I learn about foo bar last month?"

3. The agent searches your memories and provides contextual answers based on your knowledge base

Autonomous Context Awareness

The scenario: You assign a complex task to your AI agent. Instead of starting from scratch, the agent autonomously searches your memory for relevant context, leveraging your past learnings.

In this mode, your agent proactively checks your knowledge base for relevant insights and saves important findings back to your memories, all without being asked.

How it works:

1. The agent recognizes it has access to your Nowledge Mem system through MCP

2. During task execution, it autonomously decides when to:

   - Search existing memories for relevant context and patterns

   - Save new insights or decisions discovered during the task

3. This happens seamlessly in the background: your agent builds on your accumulated knowledge automatically
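The recall-then-record loop described above can be sketched in a few lines. This is an illustration of the pattern only: `InMemoryStore`, its `search` and `save` methods, and `run_task` are hypothetical stand-ins, not the tools Nowledge Mem exposes over MCP, and the word-overlap matching is a deliberate simplification.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryStore:
    """Hypothetical stand-in for the memory tools an agent reaches over MCP."""
    memories: list[str] = field(default_factory=list)

    def search(self, query: str) -> list[str]:
        # Simplified matching (assumption): any shared word counts as relevant.
        terms = set(query.lower().split())
        return [m for m in self.memories if terms & set(m.lower().split())]

    def save(self, content: str) -> None:
        self.memories.append(content)


def run_task(task: str, store: InMemoryStore) -> dict:
    # Step 1: recall relevant context before doing any work.
    context = store.search(task)
    # Step 2: do the work (stubbed out here) informed by that context.
    decision = f"Completed '{task}' using {len(context)} prior memories"
    # Step 3: record the outcome so later tasks can build on it.
    store.save(decision)
    return {"context": context, "decision": decision}


# Illustrative memory (made up for this sketch).
store = InMemoryStore(["authentication tokens follow the expiration policy note"])
result = run_task("refactor the authentication module", store)
```

After the call, `result["context"]` holds the earlier note and the store contains one additional memory describing the new decision.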

Real example: You ask Cursor to "refactor the authentication module." The agent automatically searches your memories for past security decisions, finds your note about token expiration policies, and applies those learnings while maintaining consistency with your established patterns.

4. Command Line Interface (CLI)

When to use: You're in a terminal workflow, building automation scripts, or integrating with AI agents that execute shell commands.

The nmem CLI provides full access to your knowledge base from any terminal:

# Quick search

nmem m search "authentication patterns"

# JSON output for scripting

nmem --json m search "API design" | jq '.memories[0].content'

Perfect for:

- Terminal-based workflows and automation

- AI agents that run shell commands (Cursor, Claude Code, etc.)

- Scripting and batch operations

- Integration with CI/CD pipelines

See CLI Integration for setup and full documentation.
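For scripts that need more than a `jq` one-liner, the JSON output can also be consumed from Python. The following is a minimal sketch, assuming the output is an object with a `memories` array whose entries carry a `content` field (the shape implied by the `jq` filter above). `parse_memory_contents` and `search_memories` are hypothetical helper names, and any other fields or error behavior of the CLI are not covered here.

```python
import json
import subprocess


def parse_memory_contents(raw_json: str) -> list[str]:
    """Return the `content` of each entry under `memories` (assumed shape)."""
    payload = json.loads(raw_json)
    return [m["content"] for m in payload.get("memories", []) if "content" in m]


def search_memories(query: str) -> list[str]:
    """Run `nmem --json m search <query>` and return the matching contents."""
    completed = subprocess.run(
        ["nmem", "--json", "m", "search", query],
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return parse_memory_contents(completed.stdout)


# Parsing a hand-written sample, so the sketch runs without nmem installed.
sample = '{"memories": [{"content": "Prefer cursor-based pagination"}]}'
contents = parse_memory_contents(sample)
```

With `nmem` on your `PATH`, `search_memories("API design")` would return the same kind of list from your real knowledge base.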

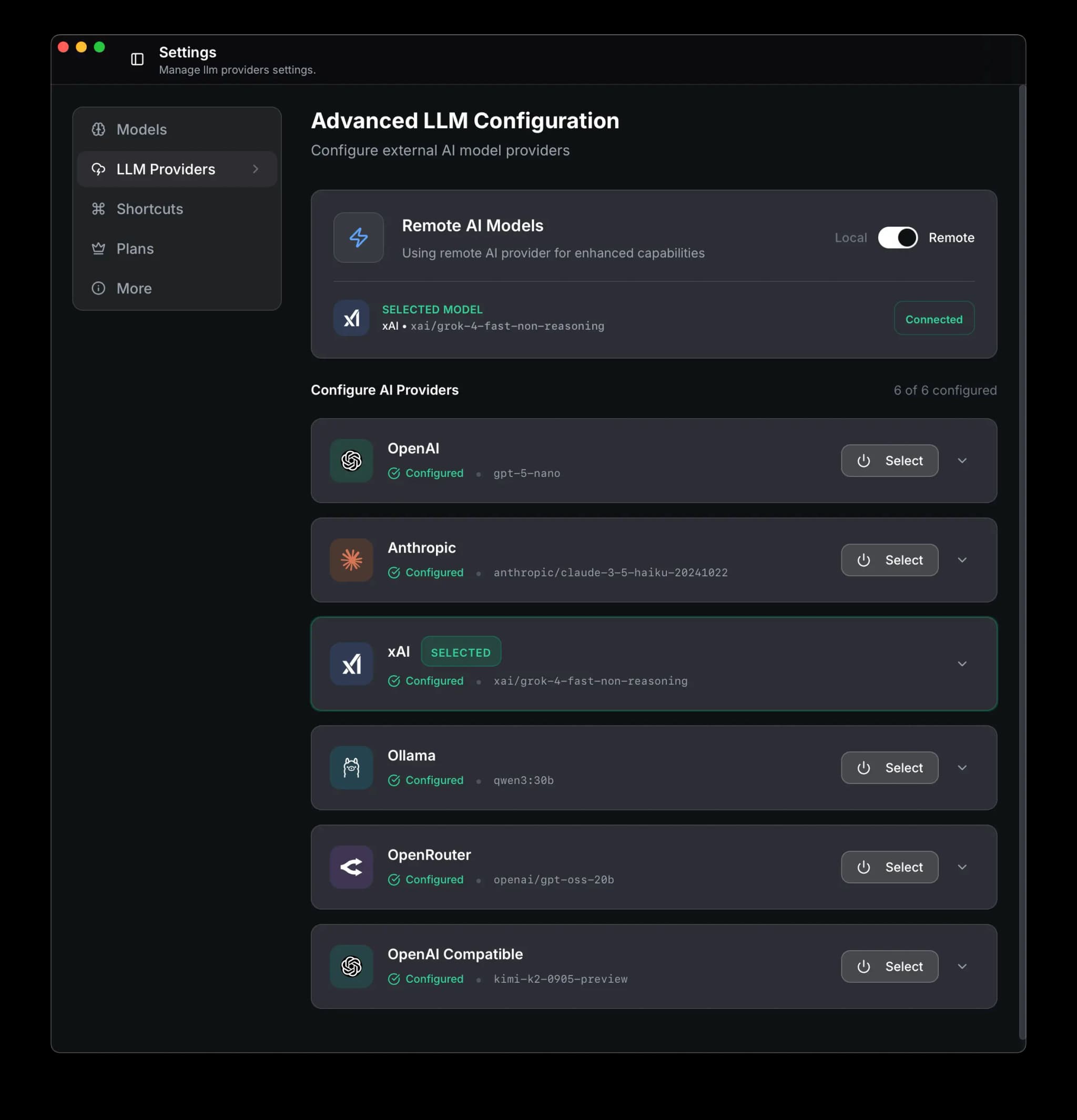

Scaling with Remote LLMs (Optional)

Default: Local, Private, Fast

By default, all search and processing happens entirely on your device:

- Zero cloud dependency - No queries sent to external servers

- Complete privacy - Your search history never leaves your machine

- Fast & offline - Works without internet connection

When You Need More Power

As your knowledge base grows or when you need more sophisticated processing, you can optionally connect your own LLM provider for enhanced capabilities.

When to consider remote LLMs:

- Your knowledge base has grown to thousands of memories

- You want faster knowledge graph extraction for large batches

- You need more nuanced semantic understanding for complex topics

- You want to leverage cutting-edge models (GPT-4, Claude Opus, etc.)

Privacy:

- In remote LLM mode, the processed data is never uploaded to any Nowledge Mem servers.

- You have full control over which LLM provider you use.

- Your queries are sent only to your chosen LLM provider, not to Nowledge Mem.

- Nowledge Mem does not log, store, or transmit your queries to any third party except the configured LLM provider.

This means you retain control of your data at all times, whether you use local or remote LLM search.

Pro Feature

External BYOK (Bring Your Own Key) models require a Pro Plan.

When a remote LLM is configured and selected, Nowledge Mem will leverage it for enhanced search capabilities and automatically fall back to the local LLM if any issues occur.
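The fallback behavior follows a familiar pattern: try the remote backend, and answer locally if it fails. A minimal sketch of that pattern, not Nowledge Mem's actual code; `search_with_fallback`, `flaky_remote`, and `local_model` are hypothetical names, and which errors trigger the fallback in the real product is not documented here.

```python
from typing import Callable


def search_with_fallback(
    query: str,
    remote: Callable[[str], str],
    local: Callable[[str], str],
) -> tuple[str, str]:
    """Try the remote backend first; on any error, use the local one.

    Returns a (backend_used, result) pair.
    """
    try:
        return "remote", remote(query)
    except Exception:
        # Unreachable provider, rejected key, timeout, and similar failures.
        return "local", local(query)


# Hypothetical stand-ins for the two backends.
def flaky_remote(query: str) -> str:
    raise ConnectionError("provider unreachable")


def local_model(query: str) -> str:
    return f"local result for {query!r}"


backend, result = search_with_fallback("token expiration", flaky_remote, local_model)
```

Returning the backend name alongside the result lets the caller show which model produced the answer, which is useful when a fallback happens silently.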

During Knowledge Graph Extraction, you can choose between using your local LLM or a remote LLM for processing.

Setting Up Remote LLM

1. Go to Settings → Remote LLM

2. Toggle Remote to enable remote LLM processing

3. Select your preferred LLM provider from the list

4. Test the connection, select your desired model, and click Save

5. Review your configuration and confirm the selected model

Next Steps

Now that you know how to search and retrieve your memories, explore advanced capabilities:

- Advanced Features - Learn about knowledge graph augmentation and exploration

- Integrations - Connect with more AI tools and workflows